Setting up the network for E-Business Suite in Oracle Cloud Infrastructure

This post covers how we set up our network when the requirement was to deploy one Exadata and several application servers for our test environments. I’ll go through each component and then show how we set everything up with Terraform. I won’t cover compartments or IAM. The focus is on the networking side and how it was built with Terraform.

The key thing to remember is that we have no requirement to access the systems from the public internet. The whole network is therefore private, and the only way to log in is from the corporate network over IPSec VPN or FastConnect.

Components we need are:

- Virtual Cloud Network – The VCN defines the CIDR block you will use, and all the other network components live under it. In this example I’ve given the VCN a /16 CIDR block.

- Three subnets under the VCN – Two are required for Exadata (the client and backup subnets) and one is used for the applications. Each subnet has a /24 CIDR block.

- Route table – The route table holds the routes the subnets need to reach the correct parts of the setup. The route table attached to our subnets has a route to the DRG (our static route to on-premise), to the NAT GW (for all other outgoing traffic) and to the Service Gateway (for all traffic going to Object Storage).

- NAT Gateway – Instead of running a NAT server, we use a NAT Gateway to route traffic out to the internet when we need it. The NAT GW is then added as a route rule in the route table.

- Service Gateway – Routes traffic from our subnets to Object Storage (backups and anything else) without the traffic going through the public internet.

- Dynamic Routing Gateway with an IPSec VPN connection – The DRG routes traffic between OCI and on-premise, and the IPSec connection defines the routes to your on-premise location.

- Customer-Premises Equipment – The CPE is linked to the DRG and used to set up the IPSec VPN connection. It holds the public IP address of your on-premise network equipment.

- Security lists for the subnets to control ingress and egress traffic – We allow all traffic between the subnets. If you are running Exadata, you need to allow at least this within the subnets.
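To make the components above more concrete, here is a minimal sketch of the VCN, the three subnets, the NAT and service gateways and the shared route table using the OCI Terraform provider. It is not our actual code. The CIDRs (10.0.0.0/16 for the VCN, 192.168.0.0/16 for on-premise), display names and variable names are assumptions. `var.drg_id` is assumed to point at a DRG that already exists and is attached to this VCN, because in our setup those live in separate global projects.

```hcl
# Sketch only: CIDRs, names and variables are illustrative assumptions.
variable "compartment_id" {}
variable "drg_id" {} # assumed: DRG created and attached to this VCN elsewhere
variable "vcn_cidr" { default = "10.0.0.0/16" }
variable "onprem_cidr" { default = "192.168.0.0/16" } # assumed on-premise range

resource "oci_core_vcn" "ebs" {
  compartment_id = var.compartment_id
  cidr_block     = var.vcn_cidr
  display_name   = "ebs-vcn"
  dns_label      = "ebsvcn"
}

# NAT gateway for outbound-only internet access
resource "oci_core_nat_gateway" "nat" {
  compartment_id = var.compartment_id
  vcn_id         = oci_core_vcn.ebs.id
  display_name   = "ebs-natgw"
}

# Look up the regional Object Storage service in the Oracle Services Network
data "oci_core_services" "object_storage" {
  filter {
    name   = "name"
    values = ["OCI .* Object Storage"]
    regex  = true
  }
}

resource "oci_core_service_gateway" "sgw" {
  compartment_id = var.compartment_id
  vcn_id         = oci_core_vcn.ebs.id
  display_name   = "ebs-sgw"
  services {
    service_id = data.oci_core_services.object_storage.services[0].id
  }
}

resource "oci_core_route_table" "private" {
  compartment_id = var.compartment_id
  vcn_id         = oci_core_vcn.ebs.id
  display_name   = "ebs-private-rt"

  # Static route to on-premise through the DRG
  route_rules {
    destination       = var.onprem_cidr
    destination_type  = "CIDR_BLOCK"
    network_entity_id = var.drg_id
  }

  # Object Storage traffic stays on the Oracle network via the service gateway
  route_rules {
    destination       = data.oci_core_services.object_storage.services[0].cidr_block
    destination_type  = "SERVICE_CIDR_BLOCK"
    network_entity_id = oci_core_service_gateway.sgw.id
  }

  # Everything else leaves through the NAT gateway
  route_rules {
    destination       = "0.0.0.0/0"
    destination_type  = "CIDR_BLOCK"
    network_entity_id = oci_core_nat_gateway.nat.id
  }
}

# Two Exadata subnets (client, backup) and one application subnet
locals {
  subnets = { exa_client = 1, exa_backup = 2, apps = 3 }
}

resource "oci_core_subnet" "this" {
  for_each       = local.subnets
  compartment_id = var.compartment_id
  vcn_id         = oci_core_vcn.ebs.id
  # Carve /24s out of the /16: 10.0.1.0/24, 10.0.2.0/24, 10.0.3.0/24 with the default CIDR
  cidr_block                 = cidrsubnet(var.vcn_cidr, 8, each.value)
  display_name               = each.key
  dns_label                  = replace(each.key, "_", "")
  prohibit_public_ip_on_vnic = true # private subnets only, no public IPs
  route_table_id             = oci_core_route_table.private.id
}
```

Because OCI picks the most specific matching route, the on-premise and Object Storage rules take precedence over the catch-all 0.0.0.0/0 rule pointing at the NAT gateway.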

What the network setup looks like

We wanted to keep the configuration really simple, as running our E-Business Suite doesn’t need a complex setup. Depending on your use case, you might need to drop the NAT Gateway, for example if there are strict rules on connecting outside the company’s network. What is not visible in the picture is that all ICMP, TCP and UDP traffic is allowed between the subnets, and only SSH and SQL*Net traffic is allowed between our company’s network and the subnets.
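The rules described above could be expressed as a security list roughly like this. This is a sketch under assumptions, not our module. The CIDRs are placeholders. SSH and SQL*Net are assumed to be inbound from the company network on port 22 and the default listener port 1521. Egress is left fully open so traffic can reach on-premise, the NAT gateway and the service gateway.

```hcl
# Sketch only: CIDRs, ports and names are illustrative assumptions.
variable "compartment_id" {}
variable "vcn_id" {}
variable "vcn_cidr" { default = "10.0.0.0/16" }
variable "onprem_cidr" { default = "192.168.0.0/16" }

locals {
  # IP protocol numbers used by OCI security lists: 1 = ICMP, 6 = TCP, 17 = UDP
  intra_vcn_protocols = ["1", "6", "17"]
  # TCP ports opened to the company network: SSH and the default SQL*Net listener port
  onprem_tcp_ports = [22, 1521]
}

resource "oci_core_security_list" "private" {
  compartment_id = var.compartment_id
  vcn_id         = var.vcn_id
  display_name   = "ebs-private-sl"

  # All ICMP, TCP and UDP between the subnets of the VCN
  dynamic "ingress_security_rules" {
    for_each = local.intra_vcn_protocols
    content {
      protocol = ingress_security_rules.value
      source   = var.vcn_cidr
    }
  }

  # Only SSH and SQL*Net from the on-premise network
  dynamic "ingress_security_rules" {
    for_each = local.onprem_tcp_ports
    content {
      protocol = "6"
      source   = var.onprem_cidr
      tcp_options {
        min = ingress_security_rules.value
        max = ingress_security_rules.value
      }
    }
  }

  # All outbound traffic allowed
  egress_security_rules {
    protocol    = "all"
    destination = "0.0.0.0/0"
  }
}
```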

Terraform setup

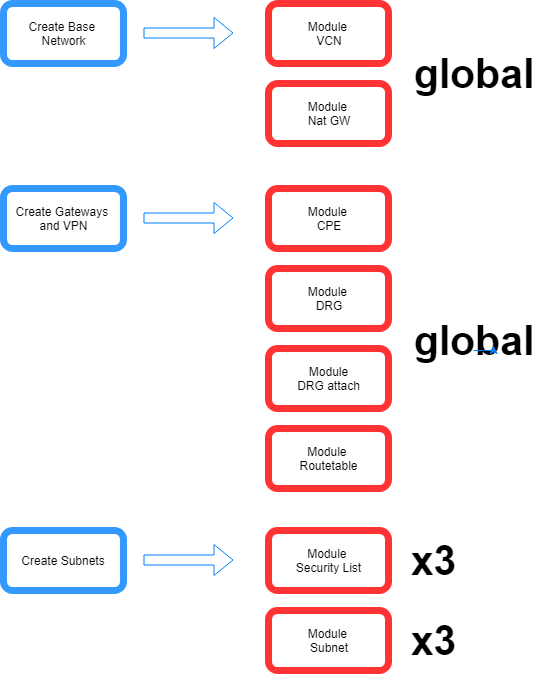

As I’ve mentioned previously, we use Terraform to deploy services as Infrastructure as Code. We use Terraform modules so that resources are always created the same way and from a specific version of the code.

We also divide service creation into global parts and other services that have dev/pprd/prod versions. This way, we test each change before applying it to the production stack, and hopefully we can automate this in the future.

Global parts are components that don’t make sense to create more than once. We could obviously create them per environment, but since we need IPSec VPN routing from our VCN to the on-premise network, we thought it would be easier to create components like the VCN, IPSec VPN and FastConnect only once. In these cases you just need to acknowledge your approach and remember that deploying changes carries a higher risk.
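As an illustration of such a global, create-once project, the DRG, its VCN attachment, the CPE and the IPSec connection could look roughly like this. It is a sketch, not our project code. The variable names and the on-premise CIDR are assumptions. The `network_details` block assumes a recent OCI provider, while older provider versions took `vcn_id` directly on the attachment.

```hcl
# Sketch only: variable names and values are illustrative assumptions.
variable "compartment_id" {}
variable "vcn_id" {}        # VCN created by the global VCN project
variable "cpe_public_ip" {} # public IP of the on-premise VPN device
variable "onprem_cidrs" {
  type    = list(string)
  default = ["192.168.0.0/16"]
}

resource "oci_core_drg" "onprem" {
  compartment_id = var.compartment_id
  display_name   = "onprem-drg"
}

# Attach the DRG to the VCN so route tables can point at it
resource "oci_core_drg_attachment" "vcn" {
  drg_id = oci_core_drg.onprem.id
  network_details {
    id   = var.vcn_id
    type = "VCN"
  }
}

resource "oci_core_cpe" "onprem" {
  compartment_id = var.compartment_id
  ip_address     = var.cpe_public_ip
  display_name   = "onprem-cpe"
}

# IPSec connection with static routes to the on-premise network
resource "oci_core_ipsec" "onprem" {
  compartment_id = var.compartment_id
  cpe_id         = oci_core_cpe.onprem.id
  drg_id         = oci_core_drg.onprem.id
  static_routes  = var.onprem_cidrs
  display_name   = "onprem-ipsec"
}

# Exposed so the per-environment projects can reference the DRG in their route tables
output "drg_id" {
  value = oci_core_drg.onprem.id
}
```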

This is how our network-related Terraform projects have been set up:

Looking at this now, we probably should have combined the VCN and gateway projects. There is no good reason for them to be separate: both create global resources, and we don’t really modify the VCN, since any change would impact all the resources below it.

We also call the security list and subnet modules three times from the Create Subnets project, as we need three subnets with three security lists.
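The same three calls could also be expressed with `for_each` on modules (Terraform 0.13 or later) instead of three separate module blocks. This is a sketch rather than our actual project. The `module-subnet` repository and its tag are hypothetical, as are the module input names and the `id` output assumed on the security list module. Only the `module-securitylist` URL comes from our setup.

```hcl
# Sketch only: module-subnet repo, module inputs/outputs and CIDRs are assumptions.
variable "compartment_id" {}
variable "vcn_id" {}
variable "route_table_id" {}

locals {
  # Two Exadata subnets and one application subnet, each a /24
  subnets = {
    exa_client = "10.0.1.0/24"
    exa_backup = "10.0.2.0/24"
    apps       = "10.0.3.0/24"
  }
}

# One security list per subnet
module "security_list" {
  source   = "git::https://gitlab.my.company/OCI/module-securitylist.git?ref=v1.1"
  for_each = local.subnets

  compartment_id = var.compartment_id
  vcn_id         = var.vcn_id
  display_name   = "${each.key}-sl"
}

# One subnet per entry, each attached to its own security list
module "subnet" {
  source   = "git::https://gitlab.my.company/OCI/module-subnet.git?ref=v1.0" # hypothetical repo and tag
  for_each = local.subnets

  compartment_id    = var.compartment_id
  vcn_id            = var.vcn_id
  cidr_block        = each.value
  display_name      = each.key
  route_table_id    = var.route_table_id
  security_list_ids = [module.security_list[each.key].id]
}
```

Keying both modules on the same map keeps each subnet paired with its own security list, and adding a fourth subnet becomes a one-line change.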

A word about modules

When we started, we put all Terraform modules into one Git (GitLab) repository. While this worked, we ran into a lot of merge issues because we were creating new modules every day.

I had a really good discussion with one of the largest Finnish AWS customers about how they use Terraform. That gave me the idea that each module should instead live in its own repository. We changed our approach, and I think it has been a really good fit for us! It’s much clearer, and we can tag each module with a specific version. With all modules in the same repository, a tag would not relate to any specific module.

So now we can call a module from main.tf using a specific version like this:

source = "git::https://gitlab.my.company/OCI/module-securitylist.git?ref=v1.1"

Remember that ref can be a tag or a branch, depending on which code base you want to use.
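For illustration, here is roughly what a single-module repository such as module-securitylist could contain. The file layout, variable names, default CIDR and the `id` output are hypothetical and not the actual contents of our module. Once the files are committed, a version is published by tagging the commit (for example `v1.1`) and pushing the tag, and callers pin it with `?ref=`.

```hcl
# Hypothetical contents of a single-module repository (module-securitylist)

# variables.tf
variable "compartment_id" { type = string }
variable "vcn_id" { type = string }
variable "display_name" { type = string }
variable "ingress_cidr" {
  type    = string
  default = "10.0.0.0/16" # assumed VCN range: allow all traffic inside it
}

# main.tf
resource "oci_core_security_list" "this" {
  compartment_id = var.compartment_id
  vcn_id         = var.vcn_id
  display_name   = var.display_name

  ingress_security_rules {
    protocol = "all"
    source   = var.ingress_cidr
  }

  egress_security_rules {
    protocol    = "all"
    destination = "0.0.0.0/0"
  }
}

# outputs.tf
output "id" {
  value = oci_core_security_list.this.id
}
```

Since the repository holds only this module, a tag such as `v1.1` describes exactly one module's code, which is the property the single shared repository lacked.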

What we are still missing

You probably noticed that a few things are still missing if you compare the first picture with the Terraform modules being used.

We are still in the process of deploying FastConnect, and I think it will be one of the few cases where we deploy manually outside Terraform. The service gateway module is also still being built, but it’s a simple addition overall and shouldn’t be difficult to add.

I hope this gives a good overview for planning your OCI network setup!