I’ve been using Oracle’s Zero Downtime Migration (ZDM) tool lately, and given there are still some limitations on cloning your database in OCI, I was thinking could we use ZDM for this?

Short answer – for sure!

If you want to use native OCI capabilities for cloning your database, you are limited on restoring the database on the same Availability Domain and using similar shapes, for PDBs you now have option to do cloning automatically which is nice, but for whole database the options are still limited.

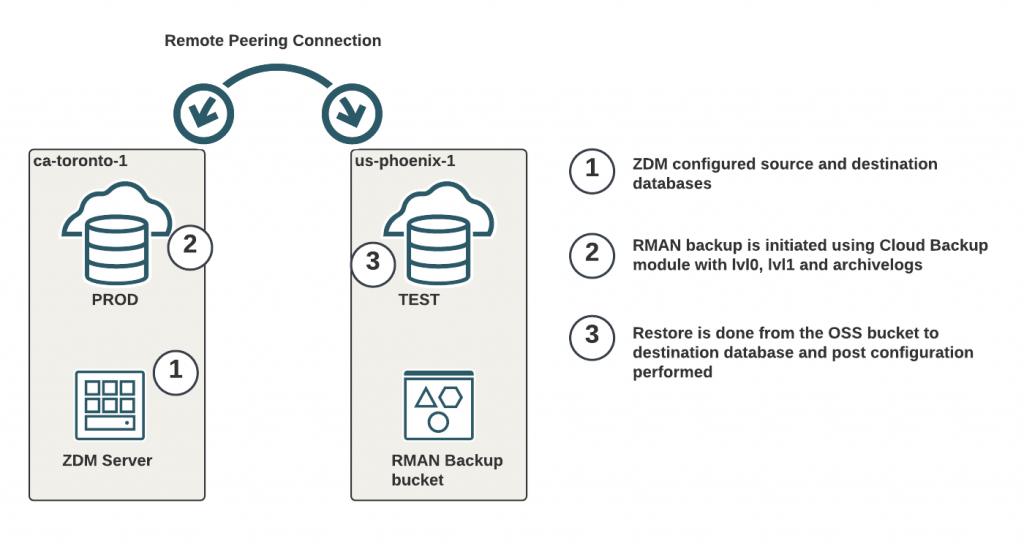

In this post I will focus on Physical Offline Migration for Oracle Database Cloud Services (DBCS) and my source will be another DBCS in another OCI Region as described below.

The beauty of ZDM in my opinion is, it handles all the OCI related work and setup automatically which normally you have to find out and do manually. This is a tool which is supported and under development by Oracle, so features are getting constantly added.

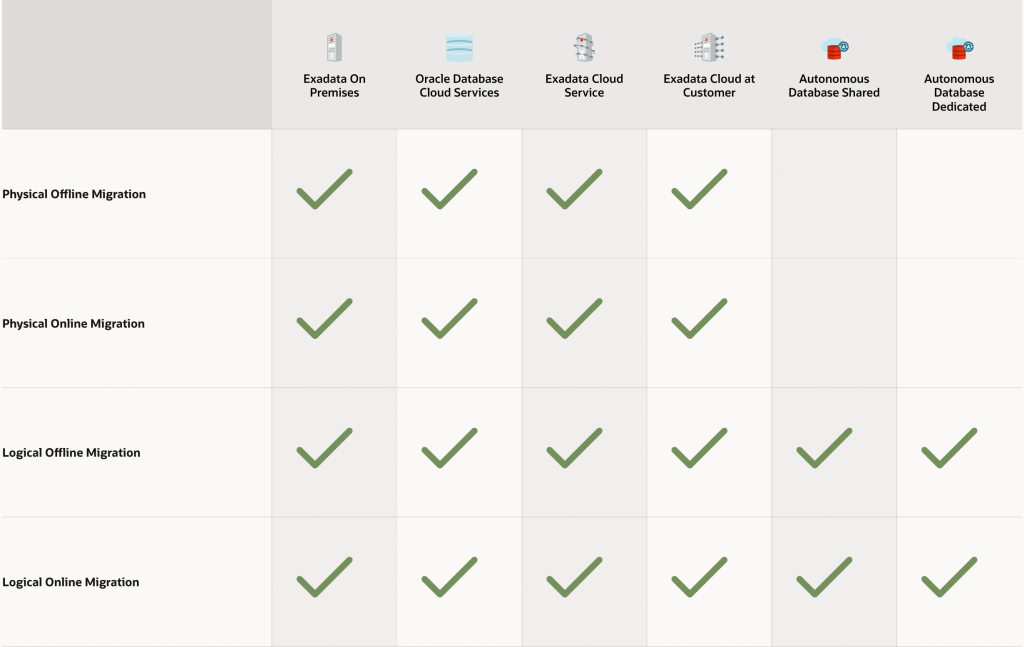

I copied next picture from ZDM home page which shows available targets and supported migration methods. As you can see, basically all OCI platforms are supported with multiple methods.

Initial Setup

I have my “PROD” database running in ca-toronto-1 and my “TEST” destination database provisioned in us-phoenix-1. The management server (just a normal VM) where I’ve installed ZDM is running in ca-toronto-1.

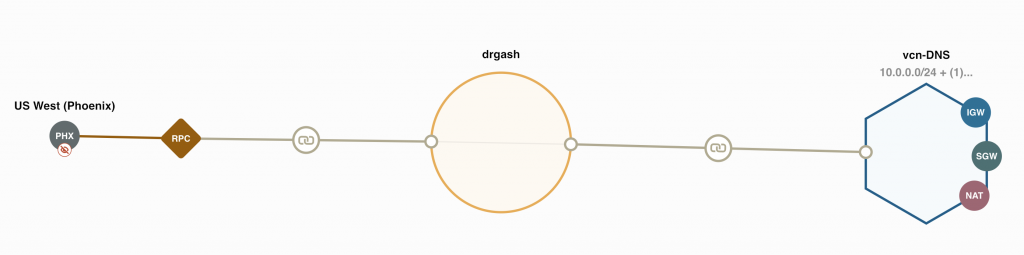

Let’s talk about the network first! There needs to be connectivity from the management server to both of the database servers, for that I’ve setup Remote Peering Connection through the Dynamic Routing Gateway in OCI and configured routing. I can access both servers with their short name, FQDN and IP address. For this test, I’ve just added the necessary lines to /etc/hosts in the ZDM server so it can resolve the addresses, one could think of using OCI Private DNS also for cross-region DNS so you wouldn’t rely on hosts files.

I’ve also setup ssh keys as per ZDM instructions, so I can login to both servers without defining key.

ZDM instructions are really clear and I don’t want to paste them here, best is to follow what they have written there to have configuration done properly! You can download ZDM from here and then transfer it to the server where you want to install it.

I’ve created Object Storage bucket in the destination us-phoenix-1 region where my backups will be stored. ZDM uses Swift Object Storage URL, so if you plan on not having the bucket in root of your tenancy, you will need to change the designated bucket location from your tenancy’s settings.

This is one of the limitations I feel ZDM has, when you have to use the Swift compartment, either you put all your backups in specific OCI compartment, or you would need to change designated compartment always when you want to use different compartment.

Response file configuration

ZDM has also a response file which you will need to configure before you kick things off, it has a lot of different variables but depending on your requirement, you might not need to configure too many variables.

You can basically create your own file from the template file zdm_template.rsp and when you start running ZDM, you just point to correct template.

#------------------------------------------#

TGT_DB_UNIQUE_NAME=TEST_phx17v

#------------------------------------------#

## Migration Method

## -----------------------------------------#

# Allowed values are

# ONLINE_PHYSICAL

# OFFLINE_PHYSICAL

#------------------------------------------#

MIGRATION_METHOD=OFFLINE_PHYSICAL

#------------------------------------------#

DATA_TRANSFER_MEDIUM=OSS

#------------------------------------------#

Platform Type

# -----------------------------------------#

PLATFORM_TYPE=VMDB

#------------------------------------------#

# Database Backup Cloud Service Properties #

#------------------------------------------#

HOST=https://swiftobjectstorage.us-phoenix-1.oraclecloud.com/v1/yzzlzrqnfjpi

OPC_CONTAINER=zdmbackup_swift

For my test, I’ve created response file with above variables only, all others I’m using default values or defining them during execution!

Additionally, you can configure your log files to go into a pre-authenticated Object Storage bucket which is handy.

Running ZDM

Once you’ve done the pre-requisites and setup and you have ZDM service running, you can use zdmcli to execute the migration/cloning.

./zdmcli migrate database -sourcedb PROD_yyz1c4 -sourcenode prod -targetnode test -backupuser "oracleidentitycloudservice/tfg@tfg.com" -rsp /home/zdmuser/app/zdm/rhp/zdm/template/zdm_cloning.rsp -tgtauth zdmauth -tgtarg1 user:opc -tgtarg2 identity_file:/home/zdmuser/.ssh/id_rsa -tgtarg3 sudo_location:/usr/bin/sudo -srcauth zdmauth -srcarg1 user:opc -srcarg2 identity_file:/home/zdmuser/.ssh/id_rsa -srcarg3 sudo_location:/usr/bin/sudo

Enter source database PROD_yyz1c4 SYS password:

Enter user "oracleidentitycloudservice/tfg@tfg.com" password:

I’m defining mainly the source database details and login info for both servers, everything else ZDM discovers automatically. You will need to supply source DB sys password and the authentication token of your user, remember it’s not the password but token you need to create for your user!

Once migration job is running (in the background), you can check the progress with zdmcli query job -jobid <my job id> or by looking the log file. Everything is logged, so you can see progress easily and what step is being executed. Few examples below from log file.

bastion: 2022-03-19T13:28:49.952Z : Executing phase ZDM_SETUP_TGT

bastion: 2022-03-19T13:28:49.953Z : Setting up ZDM on the target node test ...

test: 2022-03-19T13:40:29.976Z : TNS aliases successfully setup on the target node test...

bastion: 2022-03-19T13:40:30.076Z : Execution of phase ZDM_SETUP_TGT completed

####################################################################

bastion: 2022-03-19T13:40:32.345Z : Executing phase ZDM_OBC_INST_SRC

bastion: 2022-03-19T13:40:32.371Z : Installing Oracle Cloud Backup module on the source node prod

...

prod: 2022-03-19T13:40:46.072Z : Validating object store credentials..

prod: 2022-03-19T13:41:06.963Z : Backup recovery medium configuration check successful...

prod: 2022-03-19T13:41:07.010Z : OSS cloud backup module installed successfully.

bastion: 2022-03-19T13:41:07.078Z : Execution of phase ZDM_OBC_INST_SRC completed

bastion: 2022-03-19T13:42:56.550Z : Taking full backup on the source node prod ...

prod: 2022-03-19T13:43:18.444Z : Source database "PROD_yyz1c4" credentials exported successfully o

n node "prod"

prod: 2022-03-19T13:43:42.665Z : Setting initialization parameter control_file_record_keep_time to

60 for database PROD_yyz1c4 ...

prod: 2022-03-19T13:43:45.174Z : Executing full backup of database "PROD_yyz1c4".

prod: 2022-03-19T13:49:31.670Z : Retrieving RMAN backup statistics ...

prod: 2022-03-19T13:49:32.377Z : START TIME END TIME BACKUPTIME(MINS) INPUT(GB)

OUTPUT(GB) OUTPUT BYTES/SEC

prod: 2022-03-19T13:49:32.383Z : -----------------------------------------------------------------

-------------------------

prod: 2022-03-19T13:49:32.388Z : 2022-03-19T13:47:30Z 2022-03-19T13:49:18Z 1.42

3.77 .02 .67M

prod: 2022-03-19T13:49:33.196Z : Successfully executed incremental backup of database "PROD_yyz1c4

".

test: 2022-03-19T13:52:37.765Z : Dropping database TEST_phx17v ...

test: 2022-03-19T13:54:53.873Z : database TEST_phx17v dropped successfully

test: 2022-03-19T13:56:21.076Z : Target database "TEST_phx17v" credentials staged successfully on

node "test"

test: 2022-03-19T13:56:36.665Z : Registering database "TEST_phx17v" as a cluster resource...

test: 2022-03-19T13:56:38.677Z : Restoring SPFILE ...

test: 2022-03-19T13:57:31.750Z : SPFILE restored to +DATA/TEST_PHX17V/spfileTEST_phx17v.ora succes

sfully

test: 2022-03-19T13:57:48.614Z : Restoring control files ...

test: 2022-03-19T14:18:59.718Z : Executing post database migration actions at the target ...

test: 2022-03-19T14:19:01.425Z : Verifying Transparent Data Encryption (TDE) keystore consistency

...

test: 2022-03-19T14:19:03.233Z : Transparent Data Encryption (TDE) keystore verified successfully

test: 2022-03-19T14:19:03.244Z : Post database migration actions at the target executed successful

ly

My opinion, Oracle did great job here! You can see different steps, how long backup took, which server each step is being run on (prod, test, bastion) etc. Think of setting this up your own and getting same level of logging available? It’s a lot of work to get to same level and you have to think you would need to do it so backend tooling of OCI supports it as well.

Validating the clone

The whole process with a dummy database took around 1 hour to complete which involved taking the backup to another OCI Region and restoring the database. There are a lot of validations and setup being done by ZDM, but if they add overhead of ~20-30 minutes, I wouldn’t be overly concerned on timing.

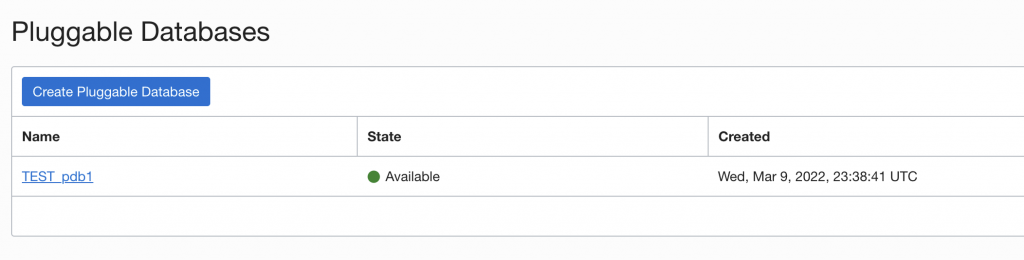

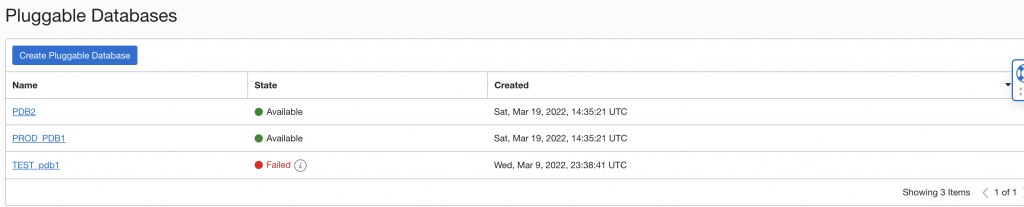

I didn’t immediately see the PDBs reflecting correctly on destination, but after around 15 minutes I could see OCI Console reflecting correct PDBs on the destination database. At the same time, dbcli was showing the correct PDBs from the server side.

[root@test ~]# dbcli list-databases

ID DB Name DB Type DB Version CDB Class Shape Storage Status DbHomeID

---------------------------------------- ---------- -------- -------------------- ---------- -------- -------- ---------- ------------ ----------------------------------------

d1fc8f57-530b-4917-8ca1-d1e8525a6f1e TEST Si 19.14.0.0.0 true Oltp ASM Configured b21428a1-4771-4103-8c13-ea9fd208c2f9

[root@test ~]# dbcli list-pdbs -i d1fc8f57-530b-4917-8ca1-d1e8525a6f1e

ID PDB Name CDB Name Status

---------------------------------------- -------------------- -------------------- --------------------

85e78d18-1e7e-498b-a716-fe458dc8c687 PROD_PDB1 TEST Configured

2e1e45d2-a734-4657-806f-ad451aabb1f1 PDB2 TEST Configured

SQL> show pdbs

CON_ID CON_NAME OPEN MODE RESTRICTED

---------- ------------------------------ ---------- ----------

2 PDB$SEED READ ONLY NO

3 PROD_PDB1 READ WRITE NO

4 PDB2 READ WRITE NO

After some time, you can see correct PDBs being listed as available on destination as well. The old TEST_pdb1 shows up as failed since it was deleted.

Summary

ZDM gives you more tools in your toolbox to handle database operations, it’s build for migration activities but there are some use cases like this, where you could take advantage of the prebuilt automation!

ZDM has extremely well orchestrated automation capabilities, it’s always important to know how database works in the background but if there are tools available automating tasks why not use them?

There are some new things what ZDM supports as well, like the integration with Cloud Premigration Analyzer Tool (CPAT), which is the old Schema Advisor when migrating to Autonomous Database.

In the next post, I’ll take a look on setting up Data Guard with ZDM and also how to stop ZDM on specific step. In reality, when you setup DG, you might not want to do switchover until specific time and ZDM has feature to support this!

Thanks for the writeup. I’m looking deeper into this tool for my team.